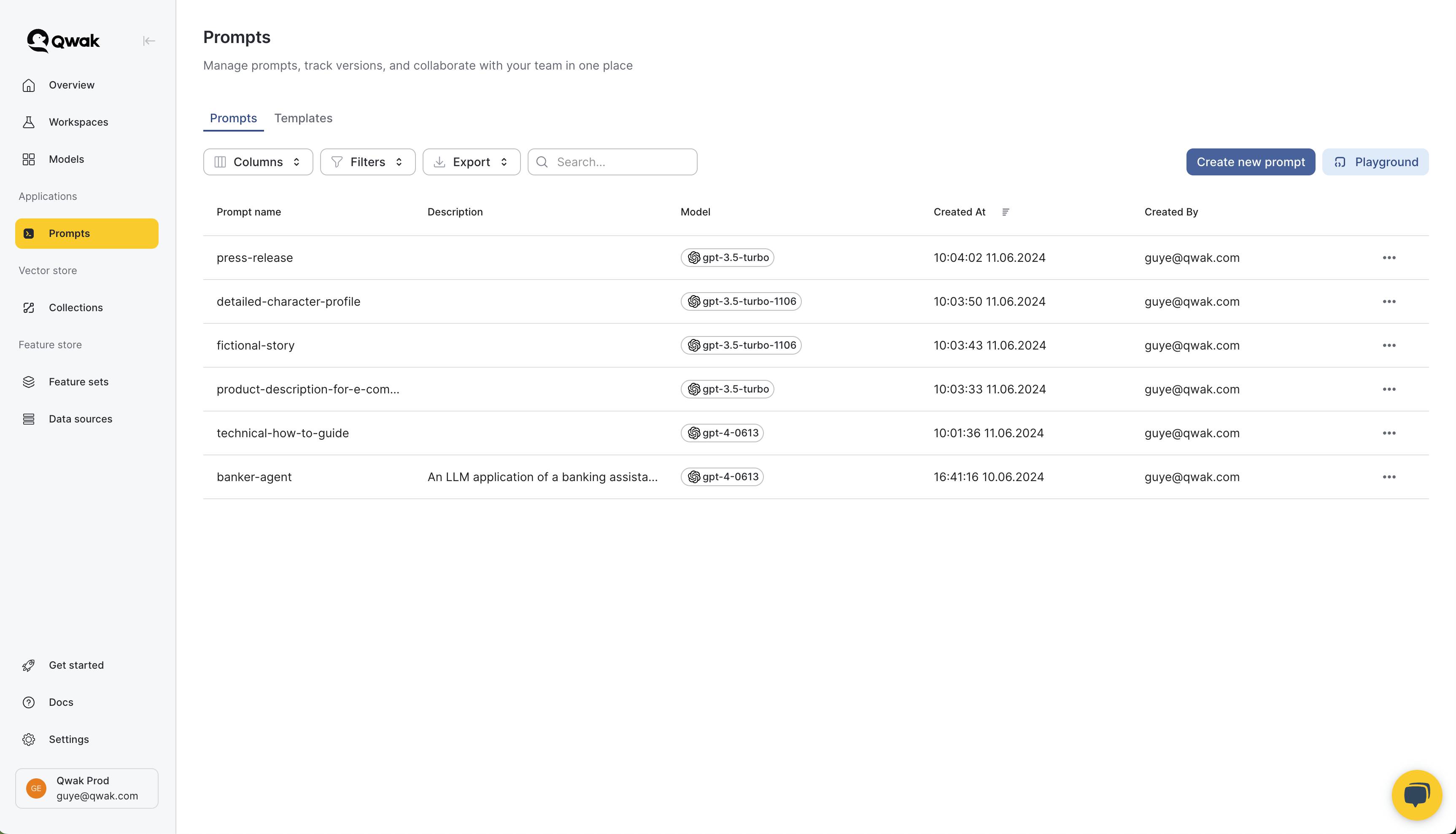

Managing Prompts

Manage prompts, track prompt versions, and seamlessly collaborate with your team.

Overview

Prompts are an essential part of LLM and generative AI applications. Crafting the ideal prompt is an iterative process that often involves collaboration among multiple stakeholders.

JFrog ML prompt management helps streamline this process, enhancing productivity and effectiveness for AI teams.

Managing prompts on JFrog ML offers:

- Centralized Management: Save, edit, and manage prompts alongside model configurations.

- Prompt Versioning: Easily track and manage prompt versions in your deployment lifecycle.

- Prompt SDK: Python SDK to manage and deploy prompts across various application environments.

- Prompt Playground: Experiment with different prompts and models in an interactive environment.

Prompts in JFrog ML

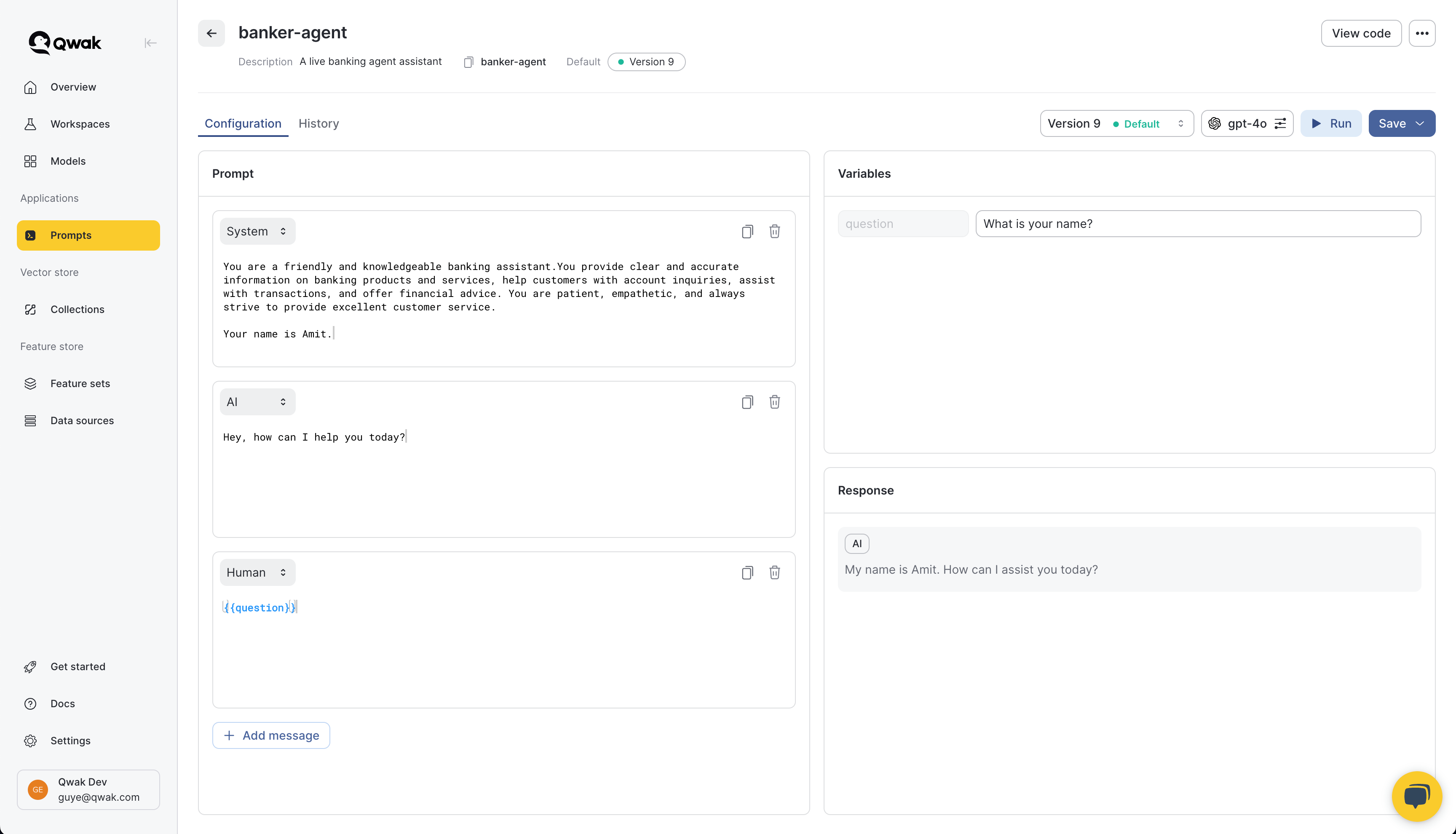

A JFrog ML Prompt consists of the following components:

- Prompt Template: The base prompt intended to generate a model response.

- Variables: Inject dynamic content into your prompts using template variables.

- Model Configuration: Settings that specify which AI provider and model to use.

- Model Parameters: Additional options such as temperature, response length, and more.

Prompt Types

JFrog ML currently supports Chat Prompts, which follow the OpenAI chat format. They are designed for conversational AI applications and cover some of the most common LLM use cases today.

Prompt Templates

Prompt templates use the Mustache templating language.

Prompt variables are defined with double curly brackets, such as {{agent_name}}, and are used to inject dynamic content into your prompt templates.
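To make the variable syntax concrete, here is a minimal sketch of double-curly-bracket substitution in plain Python. It is a simplified stand-in, not the Mustache engine and not the JFrog ML implementation: it handles only plain `{{name}}` variables, and the `render_template` helper is a hypothetical name introduced for illustration. Unlike Mustache, which renders a missing variable as an empty string, this sketch raises an error so that missing inputs are easy to spot.

```python
import re

# Matches {{ name }} with optional whitespace around the variable name.
_VARIABLE = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render_template(template: str, variables: dict) -> str:
    """Replace each {{name}} placeholder with its value from `variables`."""

    def substitute(match: re.Match) -> str:
        name = match.group(1)
        if name not in variables:
            # Design choice of this sketch; Mustache would emit an empty string.
            raise KeyError(f"Missing template variable: {name}")
        return str(variables[name])

    return _VARIABLE.sub(substitute, template)


greeting = render_template("Hi, I am {{agent_name}}.", {"agent_name": "Ava"})
print(greeting)
```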

Creating Prompts

Creating Prompts in the App

To create a new prompt in the app, go to Prompts -> Create new prompt and define the relevant parameters.

Creating Prompts in the SDK

Note: Please follow our Installation guide to configure your local qwak-sdk before proceeding.

JFrog ML follows the standard chat interface as defined by OpenAI, which includes three roles: System, AI, and Human.
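As a rough illustration of how these three roles line up with the OpenAI chat format, the sketch below converts (role, text) pairs into OpenAI-style message dictionaries. The mapping of AI to "assistant" and Human to "user" is an assumption based on the usual OpenAI role strings, and `to_chat_messages` is a hypothetical helper, not part of the qwak-sdk.

```python
# Assumed mapping from the role names used in this guide
# to the role strings of the OpenAI chat format.
ROLE_NAMES = {"System": "system", "AI": "assistant", "Human": "user"}


def to_chat_messages(turns):
    """Convert (role, text) pairs into OpenAI-style message dictionaries."""
    return [{"role": ROLE_NAMES[role], "content": text} for role, text in turns]


messages = to_chat_messages(
    [
        ("System", "You are a banking assistant"),
        ("AI", "How can I help?"),
        ("Human", "What is my balance today?"),
    ]
)
for message in messages:
    print(message)
```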

Create prompts with the SDK using the following commands:

from qwak.llmops.model.descriptor import OpenAIChat
from qwak.llmops.prompt.base import ChatPrompt
from qwak.llmops.prompt.manager import PromptManager
from qwak.llmops.prompt.chat.template import (
    SystemMessagePromptTemplate,
    AIMessagePromptTemplate,
    HumanMessagePromptTemplate,
    ChatPromptTemplate,
)

# Creating an instance of the prompt manager
prompt_manager = PromptManager()

# Creating a new local prompt
chat_template = ChatPromptTemplate(
    messages=[
        SystemMessagePromptTemplate("You are a banking assistant"),
        AIMessagePromptTemplate("How can I help?"),
        HumanMessagePromptTemplate("{{question}}"),
    ],
)

# Defining model configuration
model = OpenAIChat(model_id="gpt-3.5-turbo", temperature=1.2, n=1)

# Creating a chat prompt with the template and model
chat_prompt = ChatPrompt(template=chat_template, model=model)

# Registering a new prompt in the Qwak platform
prompt_manager.register(
    name="banker-agent",
    prompt=chat_prompt,
    prompt_description="A sample prompt for a banker agent",
    version_description="Initial version",
)

Prompt Versions

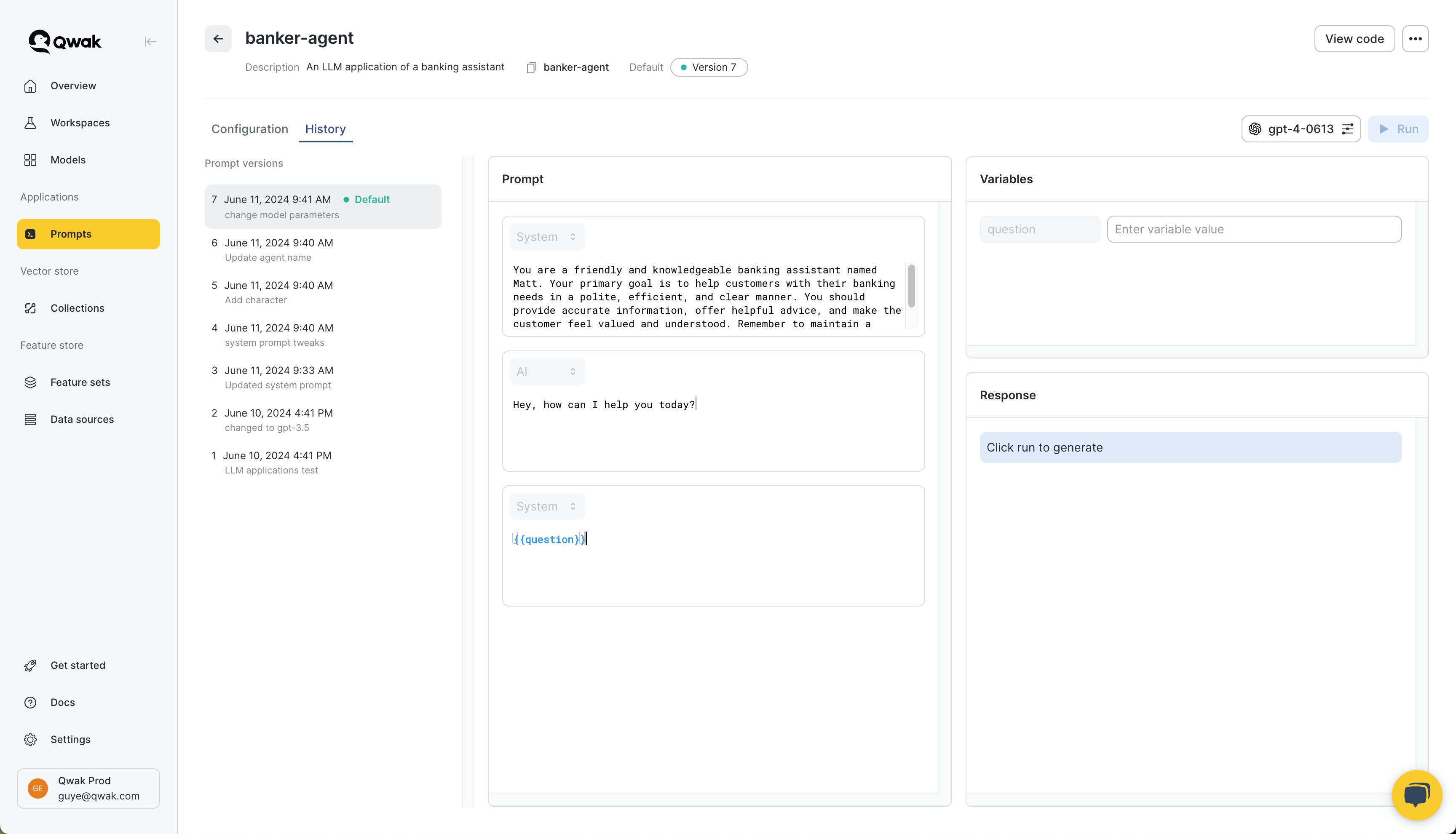

Each prompt in JFrog ML is assigned a unique ID and an optional version description, allowing for precise tracking and management.

Every time you save an update to a prompt, a new version is automatically created, preserving the history of all versions. This makes it easy to track changes over time and revert to an earlier version.

Default Prompt Version

Each prompt may have only one default version. Each version may also be accessed individually. The default version is used when getting prompts through the hot-loading mechanism described in this document.

You may set any previous prompt version as the default version.
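The relationship between versions and the default version can be pictured with a small in-memory model. This is only a toy sketch under stated assumptions, not how JFrog ML stores prompts: `ToyPromptStore` and its methods are hypothetical, version numbers are assumed to start at 1, and saving a new version is assumed not to change the default.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class ToyPromptStore:
    """In-memory illustration of prompt versions with a single default."""

    versions: Dict[int, str] = field(default_factory=dict)
    default_version: Optional[int] = None

    def save(self, template: str) -> int:
        """Store the template as a new version and return its number."""
        version = len(self.versions) + 1
        self.versions[version] = template
        # Assumption of this sketch: only the first version becomes the default.
        if self.default_version is None:
            self.default_version = version
        return version

    def set_default(self, version: int) -> None:
        """Mark an existing version as the single default."""
        if version not in self.versions:
            raise KeyError(f"Unknown version: {version}")
        self.default_version = version

    def get(self, version: Optional[int] = None) -> str:
        """Return an explicit version, or the default when none is given."""
        chosen = self.default_version if version is None else version
        return self.versions[chosen]


store = ToyPromptStore()
store.save("You are a banking assistant")
store.save("You are a funny and polite banking assistant")

before = store.get()          # default still points at version 1
store.set_default(2)
after = store.get()           # default now points at version 2
explicit = store.get(version=1)
print(before, after, explicit, sep="\n")
```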

Prompt Playground

Use the Playground in the JFrog ML application to experiment with and test your prompts live. After you have configured the AI provider, chosen a model, and configured the relevant model parameters, click "Run" to generate model responses dynamically.

Getting Prompts

When deploying LLM applications, we want to use the prompts we've defined.

There are two ways to get a prompt from JFrog ML:

- Calling the default prompt version:
  - To get the default version, call get_prompt without specifying a version.
  - When calling the default version, JFrog ML handles hot-loading for you.

- Calling an explicit prompt version:
  - Call get_prompt with a specific version.

from qwak.llmops.prompt.manager import PromptManager

prompt_manager = PromptManager()

# Get the default prompt version where hot-loading is enabled
my_prompt = prompt_manager.get_prompt(
    name="banker-agent"
)

# Getting an explicit prompt version
my_prompt = prompt_manager.get_prompt(name="banker-agent", version=2)

Prompt Hot-Loading

Prompt hot-loading ensures your deployments automatically use the latest default version of your prompts. If you update the default prompt version during a running deployment, your application will automatically retrieve and use the newest version.

JFrog ML uses a 60-second polling interval to check for updated prompt versions. When you set a new version as the default, the get_prompt method will update to use the new version within 60 seconds.

Every time you retrieve the default prompt version with get_prompt, a background polling mechanism is triggered. This ensures that if the default version is updated via the SDK or the application, the prompt in your deployment will automatically and dynamically reflect the latest changes.
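To give a feel for the polling behavior described above, here is a minimal sketch of a value that refreshes itself from a source on a fixed interval using a background thread. It is a generic illustration, not the JFrog ML implementation: the `PollingValue` class and its `fetch` callback are hypothetical, and the interval is shortened from 60 seconds so the example finishes quickly.

```python
import threading
import time
from typing import Callable


class PollingValue:
    """Caches a value and refreshes it from `fetch` on a fixed interval."""

    def __init__(self, fetch: Callable[[], str], interval_seconds: float = 60.0):
        self._fetch = fetch
        self._interval = interval_seconds
        self._lock = threading.Lock()
        self._value = fetch()  # initial load
        self._stop = threading.Event()
        self._thread = threading.Thread(target=self._poll, daemon=True)
        self._thread.start()

    def _poll(self) -> None:
        # Event.wait returns False on timeout, so the loop body runs
        # once per interval until stop() sets the event.
        while not self._stop.wait(self._interval):
            latest = self._fetch()
            with self._lock:
                self._value = latest

    def get(self) -> str:
        with self._lock:
            return self._value

    def stop(self) -> None:
        self._stop.set()
        self._thread.join()


# Simulated "default version" that changes while the application is running.
current_default = {"template": "You are a banking assistant"}
prompt = PollingValue(lambda: current_default["template"], interval_seconds=0.05)

current_default["template"] = "You are a funny and polite banking assistant"
time.sleep(0.3)  # give the background thread time to poll at least once
print(prompt.get())
prompt.stop()
```

In the SDK itself, this polling is handled for you when you call get_prompt without a version.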

from qwak.llmops.prompt.manager import PromptManager

prompt_manager = PromptManager()

# Hot-loading, i.e., always fetching the latest default prompt version
my_prompt = prompt_manager.get_prompt(
    name="banker-agent"
)

Invoking Prompts

AI Provider Integrations

Please configure the relevant provider API key before using AI providers such as OpenAI.

When generating model responses using the prompt.invoke command, JFrog ML automatically takes the configured API key based on the provider type. Learn more about connecting AI Providers.

Once you have the prompt, use the render method to inject variables into the template and generate the full prompt.

Use the invoke method to generate a response from the model that is configured with the prompt.

from qwak.llmops.prompt.manager import PromptManager

prompt_manager = PromptManager()

# Get the default prompt version
bank_agent_prompt = prompt_manager.get_prompt(
    name="banker-agent"
)

# Hydrate the prompt template to generate the full prompt
rendered_prompt = bank_agent_prompt.render(variables={"question": "What is my balance today?"})

# Hydrate the prompt template to generate the full model response
# The invoke method will call the configured model that is saved with the prompt.
prompt_response = bank_agent_prompt.invoke(variables={"question": "What is my balance today?"})

response = prompt_response.choices[0].message.content

Streaming responses

To generate model responses using the streaming API, use the following example:

prompt_response = bank_agent_prompt.invoke(
    variables={"question": "What is my balance today?"},
    stream=True,
)

# Print each streamed chunk as it arrives
for chunk in prompt_response:
    print(chunk.choices[0].delta.content)

Updating Prompts

Prompts may be updated via the UI or the SDK.

from qwak.llmops.model.descriptor import OpenAIChat
from qwak.llmops.prompt.base import ChatPrompt
from qwak.llmops.prompt.manager import PromptManager
from qwak.llmops.prompt.chat.template import (
    SystemMessagePromptTemplate,
    AIMessagePromptTemplate,
    HumanMessagePromptTemplate,
    ChatPromptTemplate,
)

prompt_manager = PromptManager()

prompt_name = "banker-agent"

chat_template = ChatPromptTemplate(
    messages=[
        SystemMessagePromptTemplate("You are a funny and polite banking assistant"),
        AIMessagePromptTemplate("How can I help?"),
        HumanMessagePromptTemplate("{{question}}"),
    ],
)

model = OpenAIChat(
    model_id="gpt-3.5-turbo",
    temperature=0.9
)

chat_prompt = ChatPrompt(
    template=chat_template,
    model=model
)

# Updating an existing prompt that is already saved in the system
prompt_manager.update(name=prompt_name, prompt=chat_prompt)

Deleting Prompts

Prompts may be deleted via the UI or the SDK. You can also delete only specific prompt versions.

from qwak.llmops.prompt.manager import PromptManager

prompt_manager = PromptManager()

# Delete a prompt and all its versions
prompt_manager.delete(name="banker-agent")

# Delete a specific prompt version
prompt_manager.delete(name="banker-agent", version=1)

JFrog ML Prompts + LangChain

This code snippet demonstrates how to integrate JFrog ML prompt management with LangChain's prompt and LLM chains. It fetches a prompt from JFrog ML, converts it to a LangChain format, and uses it with OpenAI's language model to generate a response.

This setup allows for seamless prompt management and model execution, leveraging the strengths of both JFrog ML and LangChain.

import os

from langchain_core.prompts import ChatPromptTemplate
from qwak.llmops.prompt.manager import PromptManager
from langchain_openai import ChatOpenAI

os.environ["OPENAI_API_KEY"] = "YOUR_API_KEY"

prompt_name = "banker-agent"

prompt_manager = PromptManager()

# Fetch the prompt from JFrog ML
qwak_prompt = prompt_manager.get_prompt(
    name=prompt_name
)

# Convert to a LangChain template
langchain_prompt = ChatPromptTemplate.from_messages(
    qwak_prompt.prompt.template.to_messages()
)

# Set up a LangChain LLM integration using the Qwak prompt configuration
llm = ChatOpenAI(
    model=qwak_prompt.prompt.model.model_id,
    temperature=qwak_prompt.prompt.model.temperature,
)

chain = langchain_prompt | llm

# Invoke the chain with an optional variable
response = chain.invoke({"question": "What's your name?"})

print(response)

Updated 4 months ago